Illustrating Geographical Distributions and Describing Populations Using Data from the U.S. Census Bureau

In a previous post I gave an example and step-by-step instructions for geocoding (converting street addresses to latitude/longitude coordinates). In another post I showed, again step by step, how to use QGIS to illustrate the spatial distribution of geocoded addresses as point and choropleth maps, and how to perform a ‘spatial join’ that tags each location with the identifier of the geography it falls in (Census tract, ZIP code, legislative district, or whatever geographic level interests you).

In this post, using ArcMap rather than QGIS (the process is conceptually the same), I provide an example and step-by-step instructions for taking this one step further: joining tract-level U.S. Census demographic data to the address locations file without ever leaving ArcMap.
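For readers who like to see the idea in code, here is a minimal Python sketch of what the two joins do conceptually. It is not what ArcMap or QGIS run internally, just an illustration under simplifying assumptions: tracts are plain polygons given as lists of (lon, lat) vertices, the point-in-polygon test is a basic ray-casting check, and every name and value (`TRACT_A`, `population`, the coordinates) is a made-up placeholder rather than real Census data.

```python
def point_in_polygon(lon, lat, polygon):
    """Ray-casting test: True if (lon, lat) falls inside the polygon.

    `polygon` is a list of (lon, lat) vertices; the last vertex is
    implicitly connected back to the first.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > lat) != (y2 > lat):
            # Longitude at which the edge crosses that horizontal line.
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def join_addresses_to_tracts(addresses, tract_shapes, tract_data):
    """Spatial join (address -> tract id), then attribute join (tract id -> data)."""
    joined = []
    for addr in addresses:
        row = dict(addr)
        row["tract_id"] = None
        for tract_id, polygon in tract_shapes.items():
            if point_in_polygon(addr["lon"], addr["lat"], polygon):
                row["tract_id"] = tract_id
                # Attach that tract's demographic fields to the address row.
                row.update(tract_data.get(tract_id, {}))
                break
        joined.append(row)
    return joined


# Hypothetical toy inputs: two square "tracts" and three geocoded addresses.
tract_shapes = {
    "TRACT_A": [(0, 0), (1, 0), (1, 1), (0, 1)],
    "TRACT_B": [(1, 0), (2, 0), (2, 1), (1, 1)],
}
tract_data = {
    "TRACT_A": {"population": 1000},
    "TRACT_B": {"population": 2000},
}
addresses = [
    {"id": 1, "lon": 0.5, "lat": 0.5},
    {"id": 2, "lon": 1.5, "lat": 0.25},
    {"id": 3, "lon": 5.0, "lat": 5.0},  # falls outside both tracts
]

joined = join_addresses_to_tracts(addresses, tract_shapes, tract_data)
for row in joined:
    print(row)
```

The first join is geometric (which polygon contains the point) and the second is an ordinary key lookup on the tract identifier. Downloading a file that already bundles the tract shapes with the demographic fields, as in Step 1, means the second join comes along for free.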

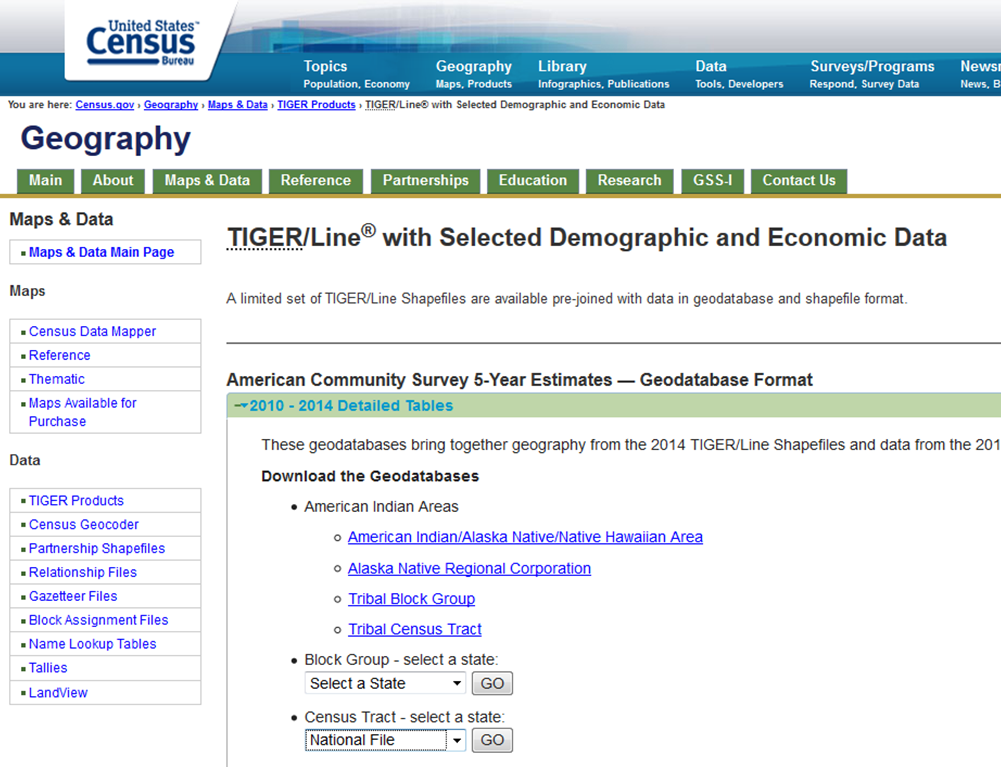

Step 1, Download the Tract Shapefile and Data: The U.S. Census Bureau provides downloads that bundle the tract-level shapefile (the underlying map) with selected demographic and economic data. These data are derived from the American Community Survey (ACS) and are presented as five-year estimates: because the ACS is a sample survey, five years of data must be pooled to produce reasonably reliable estimates for areas as small as tracts. In this case I have elected to download the national file containing the 2010-14 tract-level estimates, the most recent available at the time of writing. The files are available from the U.S. Census Geodatabases page.
